Press Photo

"Signals" by John Stanmeyer.

Final Installation

What I Learned

Through this project, I learned a lot about the following things:

- Blender (MoCap and iFacialMocap)

- Rendering

- Storytelling

- Problem Solving

Blender was probably the thing I learnt the most about. I had never used the software before, but in the span of three weeks I learnt how to construct, sculpt, add and edit textures, animate, and render. I also learnt how to import motion capture files and link them to armatures in the models I had created, and I learnt about apps you can use alongside Blender, in particular iFacialMocap. Looking for this app taught me a lot about research, as well as about installing plug-ins and how plug-ins work in Blender.

Storytelling is something I am very strong at, but even so, this project taught me a lot about when is too much and when is too little. I learned how to include just enough that you can create a surprise without it making no sense or coming out of nowhere.

We came across many problems in this project: no budget, TouchDesigner issues, and rendering problems in multiple programs. I really had to work on my patience and on coming up with new ways to do things.

Week 1

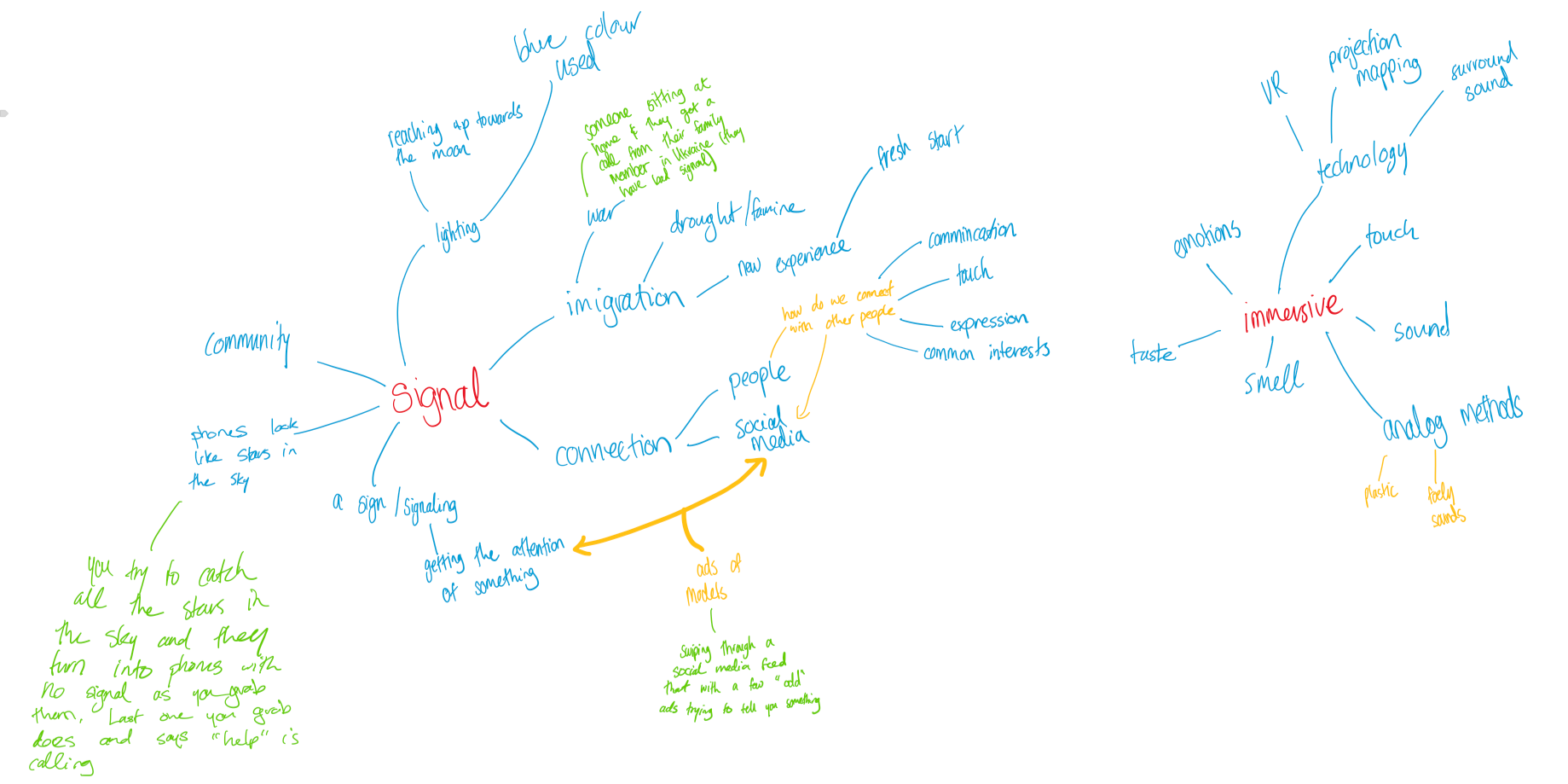

This was my initial investigation into the image. I started by bringing the image into Procreate and marking the things I noticed straight away.

After this, we each created a general mind map of ideas for the installation. I came up with a few ideas, but nothing I was super excited about.

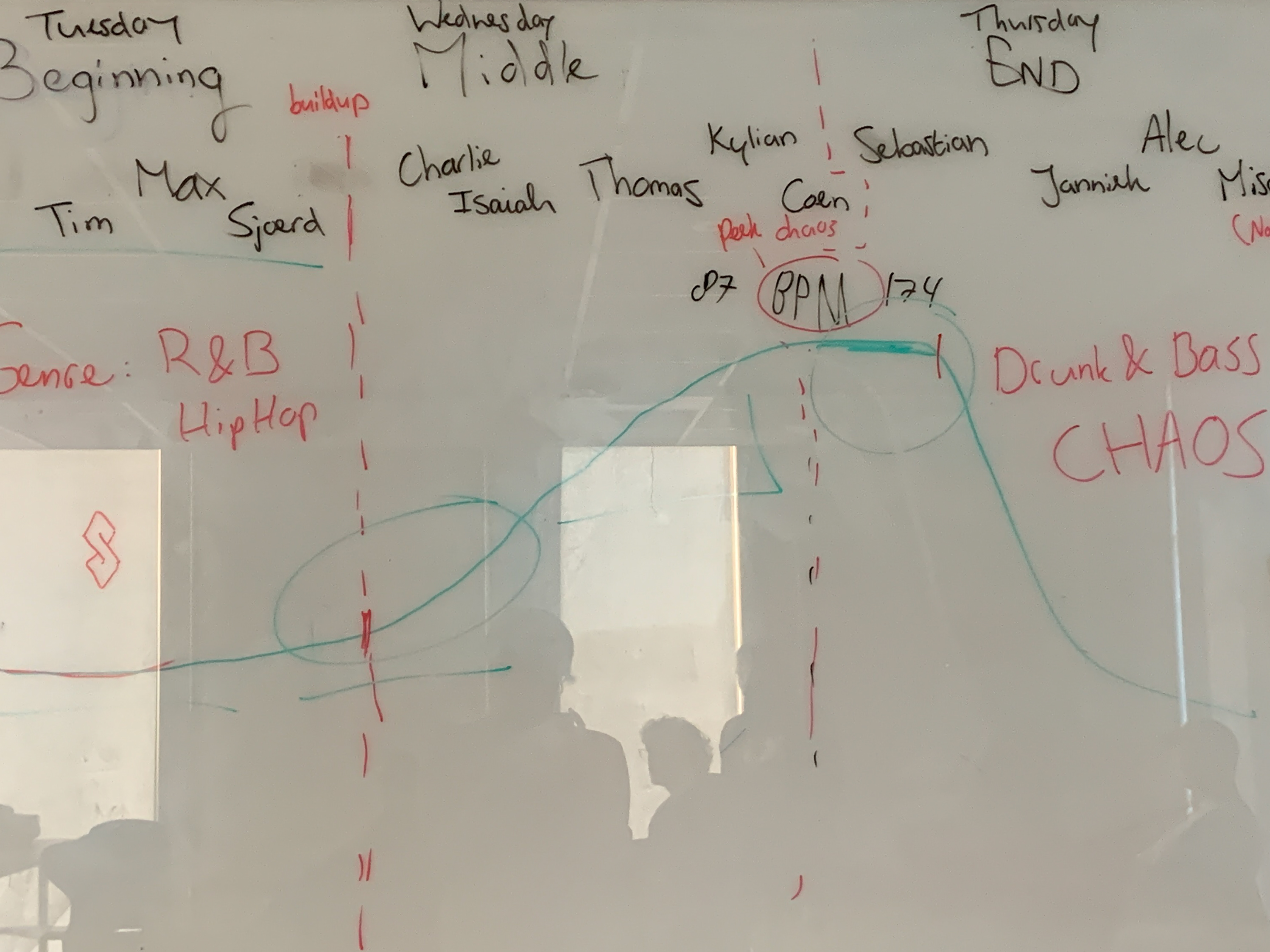

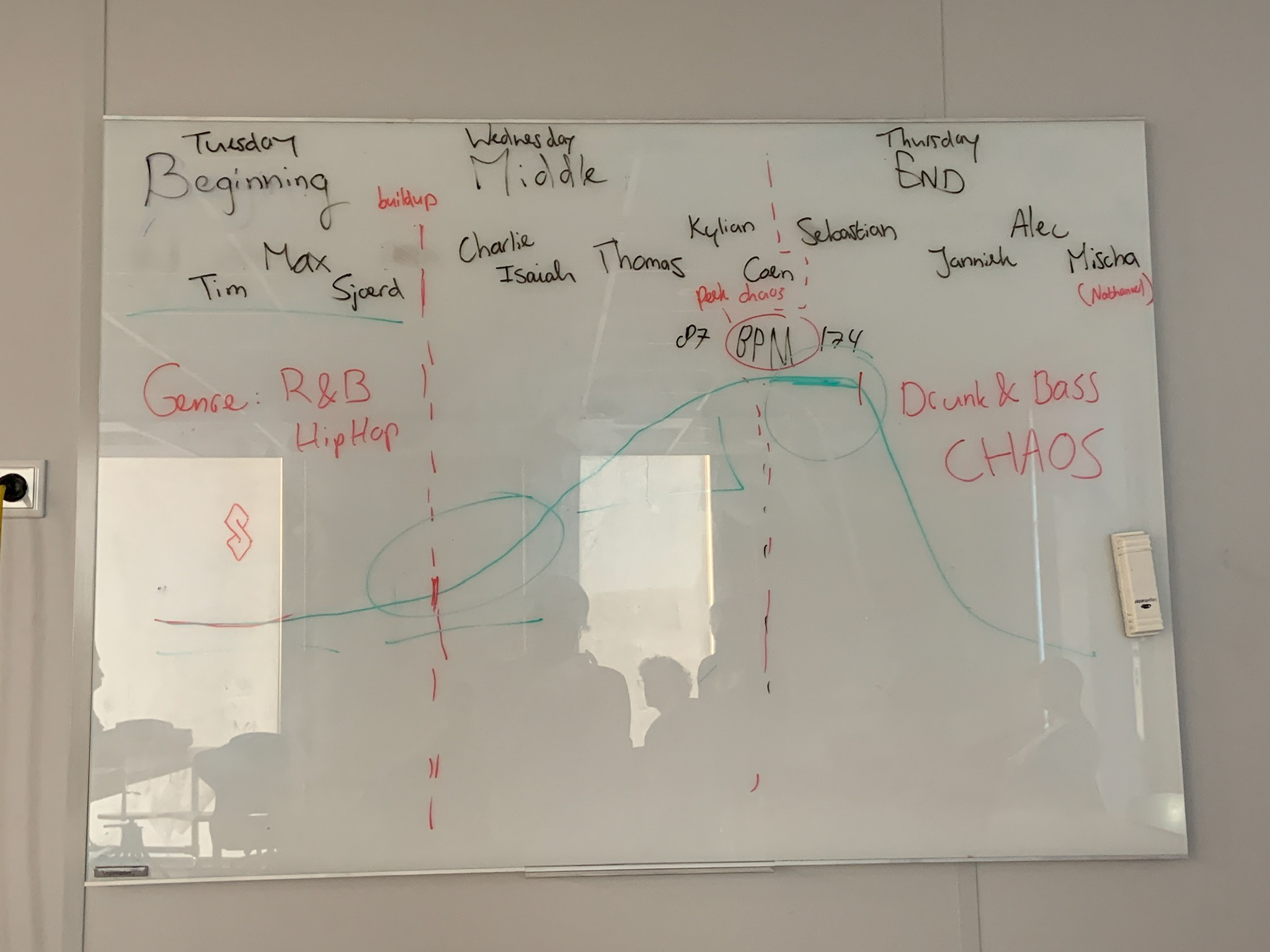

We then had a group discussion where we realised the common theme was using phones in some way, as we all agreed that even when we are not on them, we are receiving and sending out signals. We also considered using sensors so that a static noise would play when people entered the installation. By the end of our discussion, we had things we liked but nothing concrete. We decided to work on individual ideas over the weekend and talk again on Sunday morning.

We then had an online meeting to try to focus on what our story and idea would be (notes can be found to the left). We went back to the idea of the phone and tried to see what we could create with it. We decided to simplify the use of phones so that the audience would only use them to send a text.

We then had to work out what you would be sending a text to. We had previously considered using an AI image generator and projection mapping but felt we could do something better. This led us to take inspiration from sources such as an exhibition by Harriet Davey at the NXT Museum that we visited with college, and the video game "Detroit: Become Human." We were going to create an AI-like figure using Blender and motion capture. In the installation, the audience would have a conversation with the AI by choosing options on their phone that would alter the interactions and the story.

I offered to take on the Blender work. I don't have a lot of experience with this software, but I was willing to learn and felt it would help expand my skills.

We then had another in-class meeting where we discussed how we would make texting work. We realised we would not be able to do this with the time and skills we had. We looked into other ideas, such as standing in certain places, but eventually came up with the idea of putting a microphone in front of the audience, with prompts on screen telling them what to say into it. The mic isn't actually connected to anything. Instead, we listen from behind the screen and use TouchDesigner to play the video that matches each response.

We also worked on the story and decided that you would be a person reaching out for advice on how to help your friend or co-worker. You can then choose between two friends, Anne and Mark, and go through their story. At the end, the AI offers advice on how to help.
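The branching structure of the story can be modelled as a small scene graph. In this model, the person listening behind the screen picks the next clip based on what the audience says. The minimal Python sketch below illustrates that idea. It is not what we actually built, since we triggered the videos by hand in TouchDesigner. The scene names, clip filenames, and the `STORY`, `next_scene` and `play_path` helpers are all hypothetical.

```python
# Hypothetical scene graph: each scene has a video clip and the responses
# the operator listens for, mapped to the scene that should play next.
STORY = {
    "intro": {"clip": "intro.mp4", "next": {"anne": "anne_1", "mark": "mark_1"}},
    "anne_1": {"clip": "anne_1.mp4", "next": {"continue": "advice"}},
    "mark_1": {"clip": "mark_1.mp4", "next": {"continue": "advice"}},
    "advice": {"clip": "advice.mp4", "next": {}},  # ending: the AI's advice
}


def next_scene(current, response):
    """Return the scene to trigger for a heard response.

    Unrecognised responses keep the current scene, so nothing new plays.
    """
    return STORY[current]["next"].get(response.strip().lower(), current)


def play_path(responses, start="intro"):
    """Return the list of clips played for a sequence of audience responses."""
    scene = start
    clips = [STORY[scene]["clip"]]
    for response in responses:
        new_scene = next_scene(scene, response)
        if new_scene != scene:
            scene = new_scene
            clips.append(STORY[scene]["clip"])
    return clips


print(play_path(["Anne", "continue"]))  # ['intro.mp4', 'anne_1.mp4', 'advice.mp4']
```

Keeping the story as data rather than hard-coded branches would make it easy to add a third friend or change the ending without touching the playback logic.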

I created a playlist on YouTube of videos that helped me learn the basics of Blender, motion capture, and facial capture. I found them extremely helpful and was able to use what I had learnt to create a 3D model of an AI I liked.

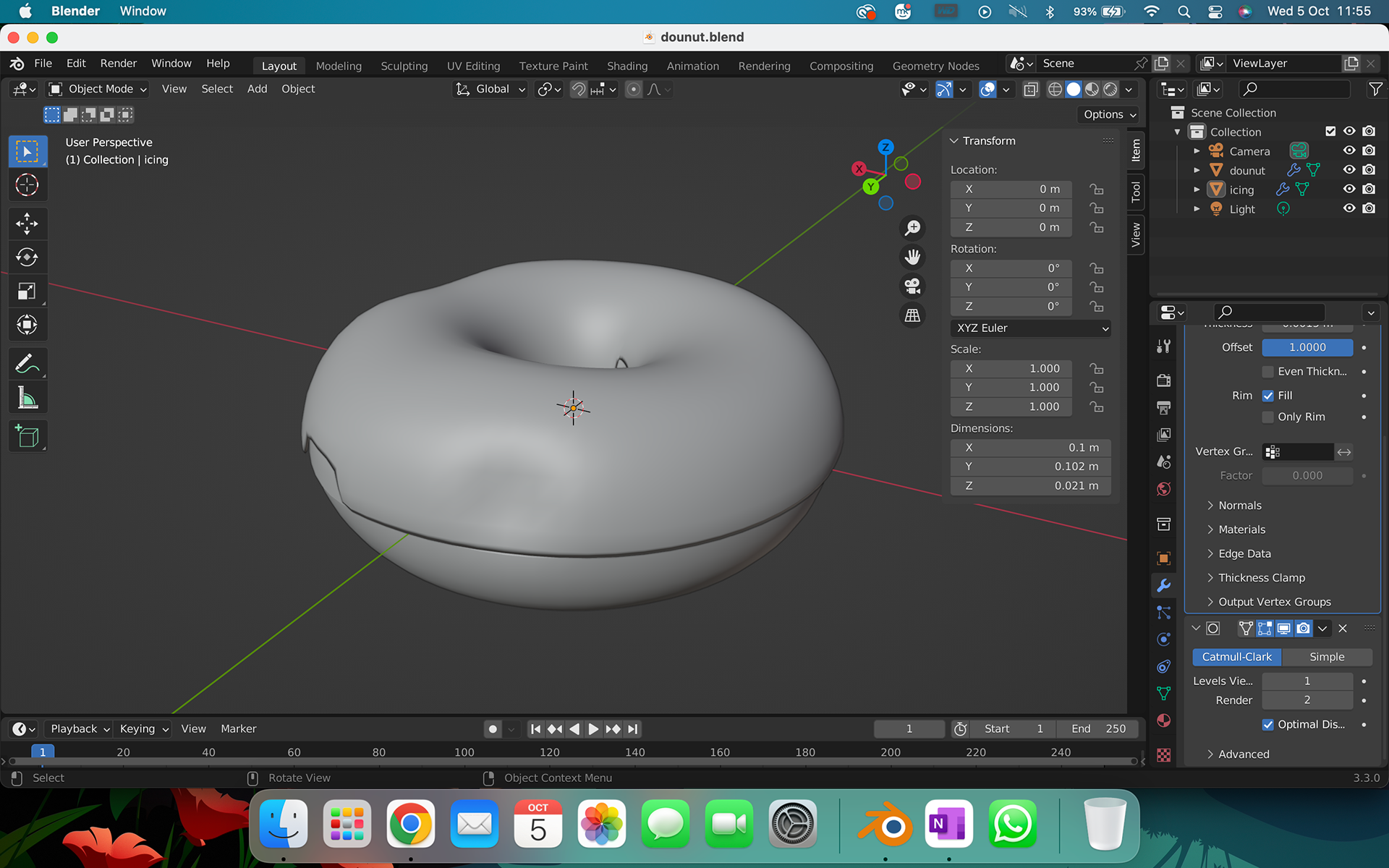

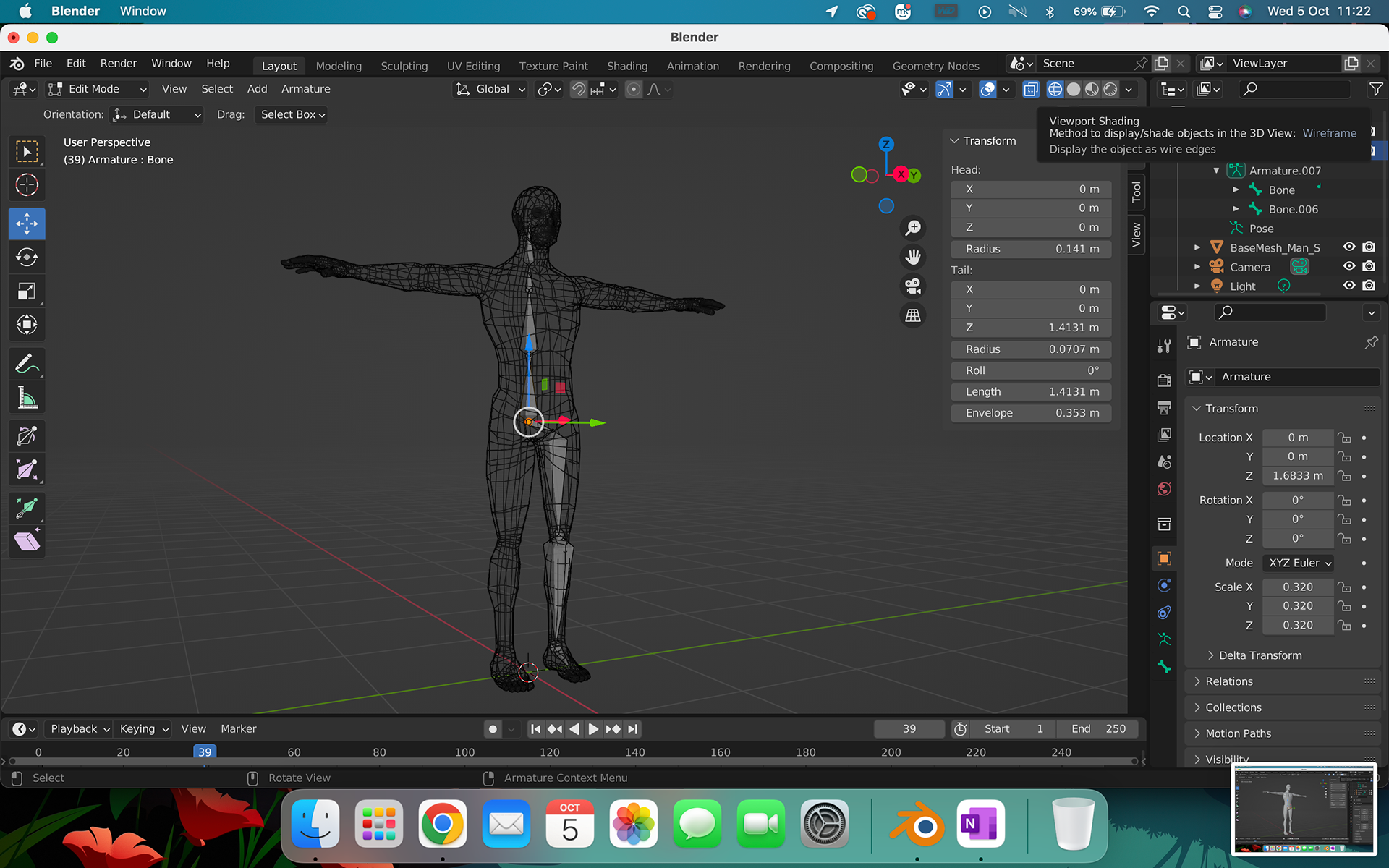

As the photos show, I learned how to sculpt and build a doughnut and a human form in Blender. I also learned how to build an armature. This was both the easiest and the most difficult part, because the model has to be placed very precisely and the armature has to be hidden inside the model correctly.

I started working on motion capture because we originally wanted the AI to walk into the scene. This did not end up happening, however, as we decided to go with a head and shoulders instead of a full body. I continued learning this skill anyway so that I could use it in future projects.

As you can see below, I used an app called MoCap, which records a video in the app and then generates a motion capture file. That file can be imported into Blender and linked to the armature you have built for your model. I found this app very difficult to use and would not recommend it if you have the budget for other software.

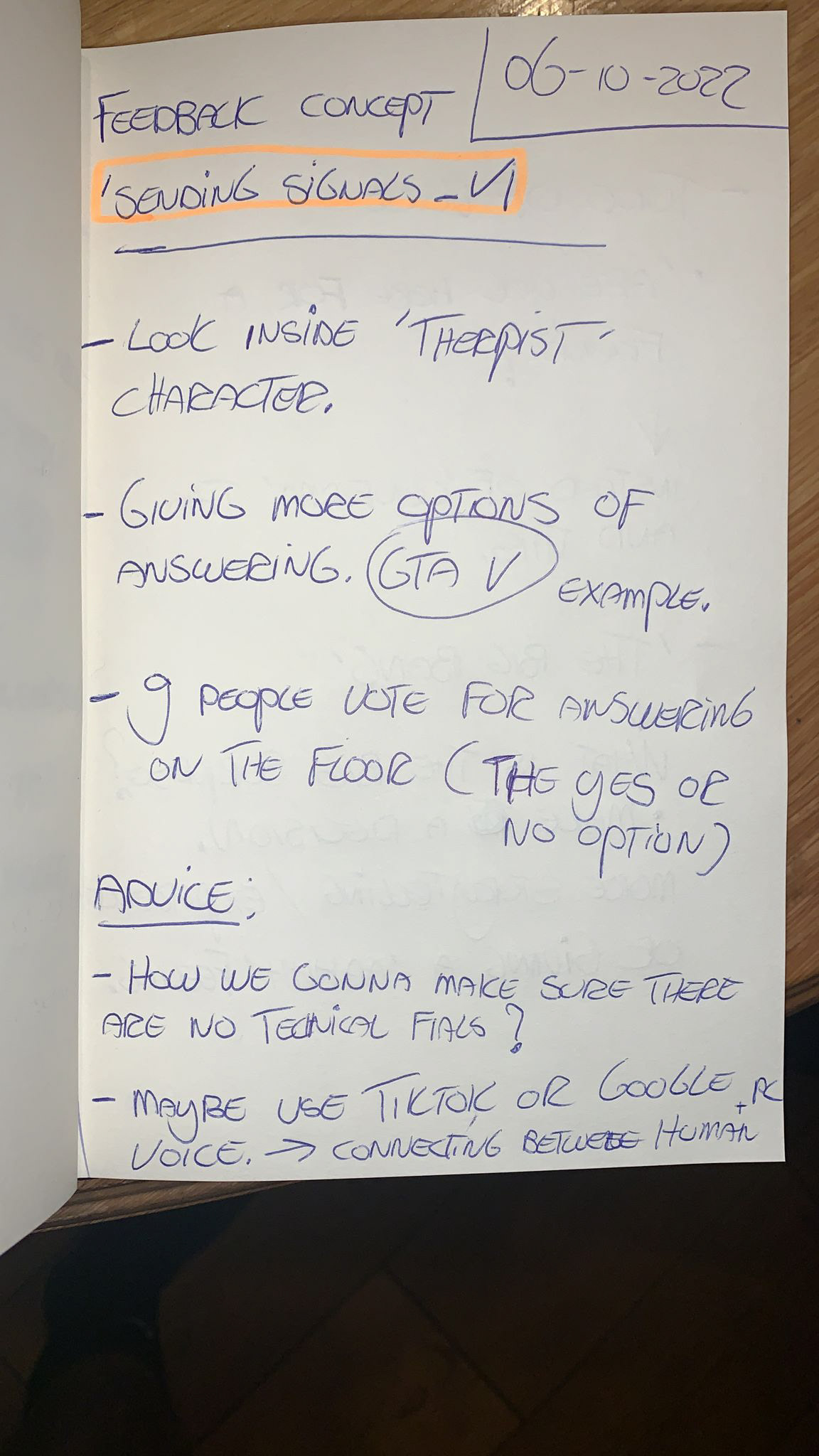

Presentation #1:

For our first presentation, we explained our concept to the class and showed the work we had done.

I presented slides 7 to 9 and spoke about what I had learned so far about Blender and motion capture.

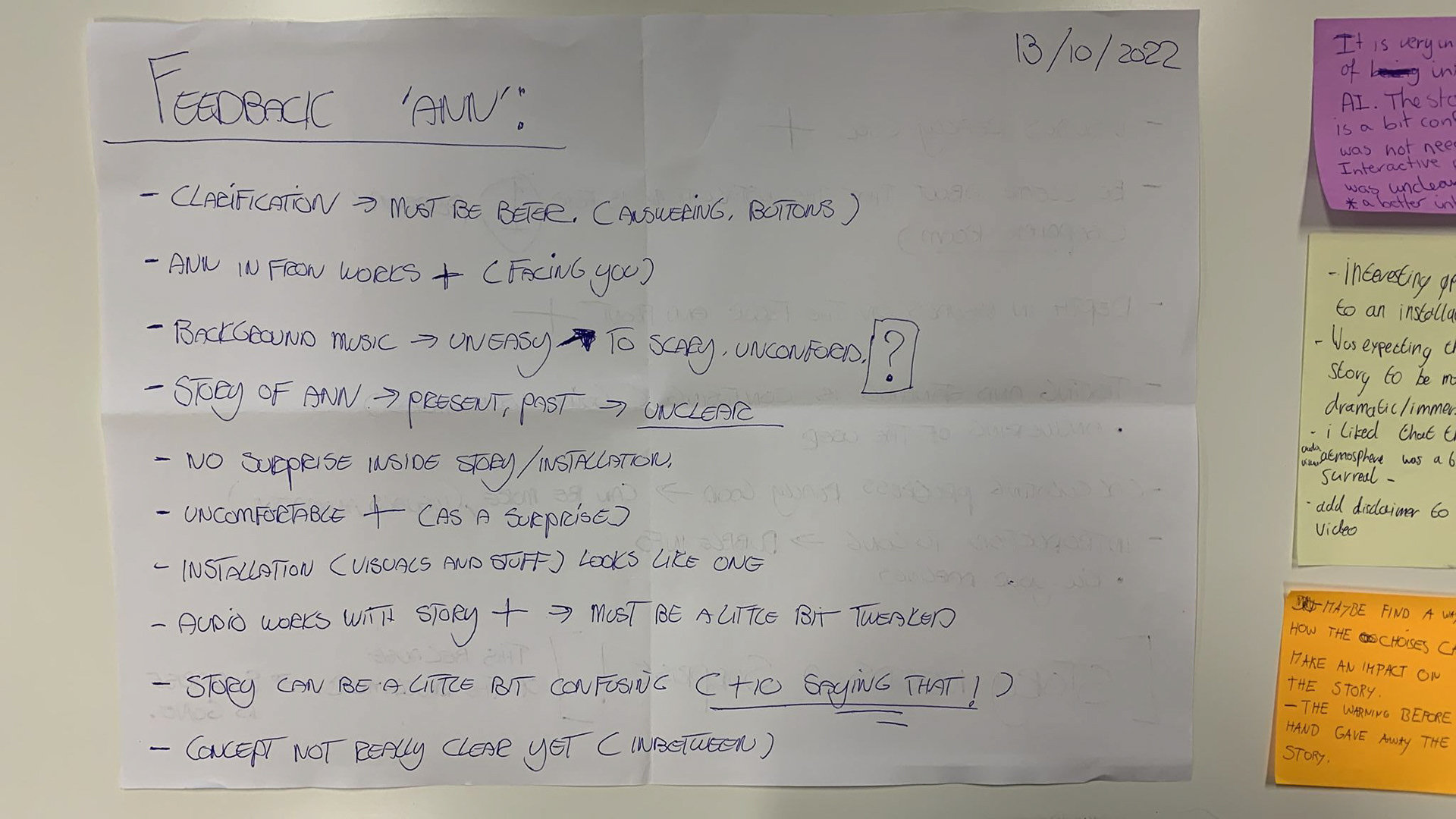

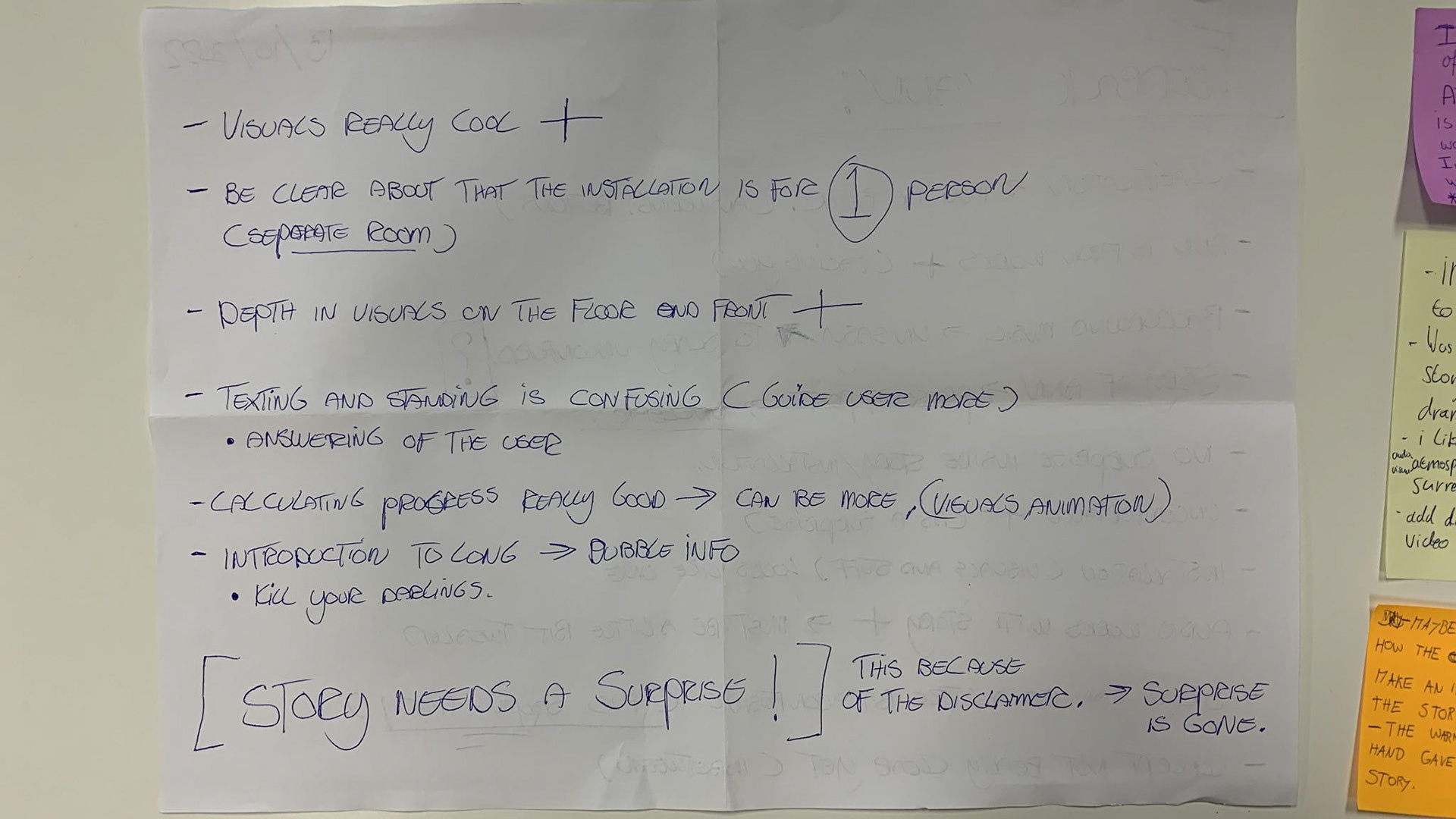

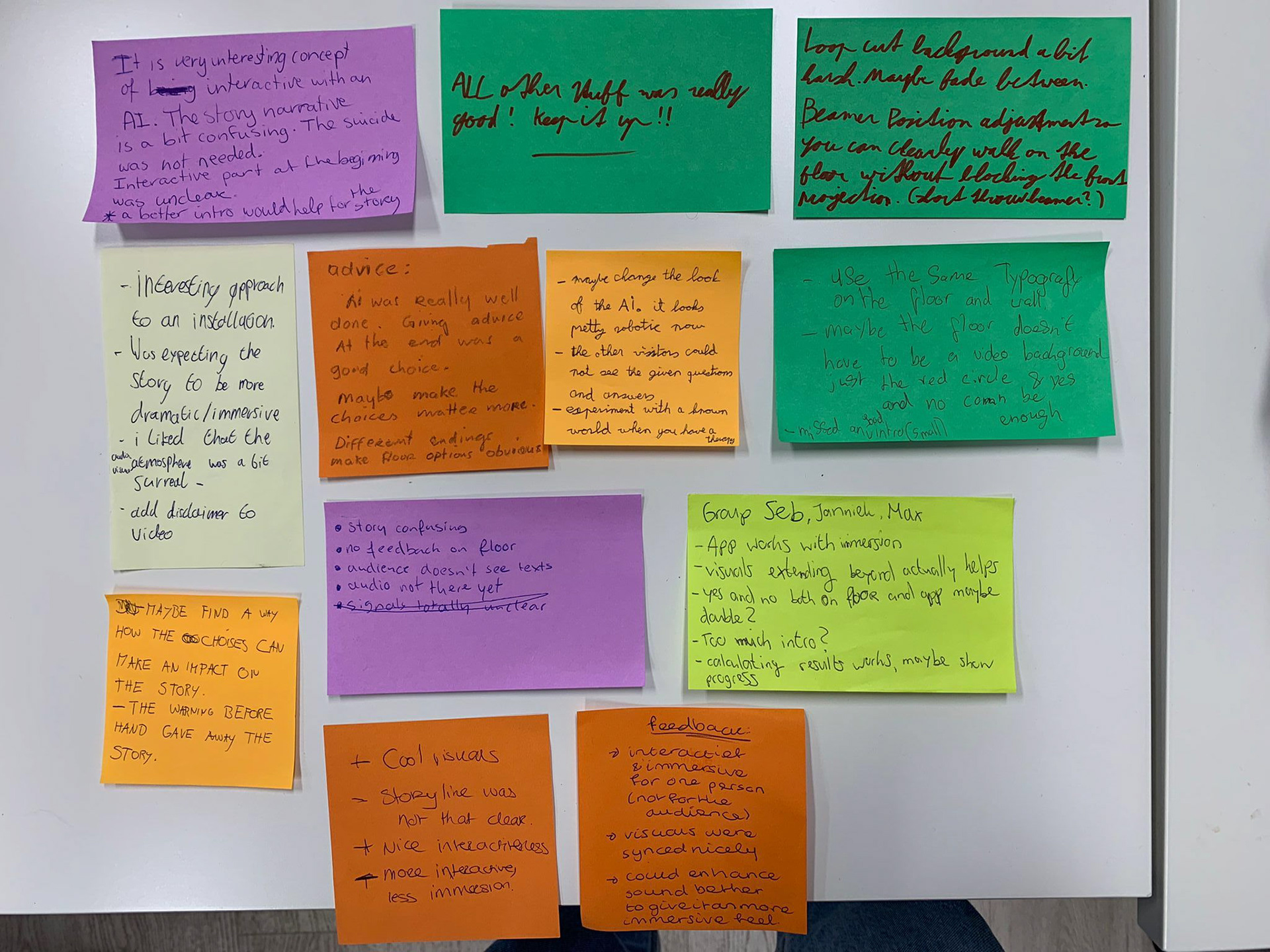

This is the feedback we received. From it, we realised we needed to avoid confusing the audience with too many options for interaction, so we decided to narrow it down to just the phone and the floor.

Week 2

I did a lot of research into the best and cheapest options for facial capture in Blender. The cheapest option I could find was free, but it involved putting dots all over my face and having a lot of knowledge of Blender. I didn't have the time or willpower for that, so I kept looking and found the next best thing: iFacialMocap. It worked by installing a plug-in in Blender and an app on your phone. You then link the phone app to Blender through the plug-in, and it showed the live movement of my face in Blender.

The app worked amazingly during editing, but when it came to rendering, I ran into huge problems. The two main ones were:

- No definition in ANN's face

- ANN's face appeared doubled and looked really strange when rendered

The first issue, the lack of definition, was easy enough to fix. We changed the light source in Blender from a spot light to a sun light, which stopped the render from overexposing and gave the face just the right amount of definition.

The second issue, the face doubling, we couldn't fix in time for the MidFi presentation.

Instead, I used a quick workaround so we could still show ANN at our MidFi presentation. I changed the background to green and then screen-recorded ANN as I said my lines. I recorded the audio separately so the quality would be better.

After this, I took the video into Premiere Pro and used a colour key effect to select the green in the video and remove it, making that area transparent. I then cropped the video to the size of ANN's face, which made it easier to render.
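The colour key and crop steps can be sketched in a few lines of Python. This is a simplified illustration, not Premiere Pro's actual keying algorithm. It assumes a frame is a list of rows of (r, g, b) tuples. A pixel counts as green screen when its green channel is bright and clearly higher than both red and blue. The threshold values and the `colour_key` and `crop` names are hypothetical.

```python
def colour_key(frame, min_green=100, margin=40):
    """Return a copy of the frame as (r, g, b, a) pixels.

    Green-screen pixels (bright green, well above red and blue) get alpha 0
    (transparent); every other pixel stays fully opaque (alpha 255).
    The thresholds are assumed values, not Premiere Pro settings.
    """
    keyed = []
    for row in frame:
        new_row = []
        for r, g, b in row:
            is_green = g >= min_green and g - r >= margin and g - b >= margin
            new_row.append((r, g, b, 0 if is_green else 255))
        keyed.append(new_row)
    return keyed


def crop(frame, left, top, width, height):
    """Keep only the rectangle starting at (left, top), e.g. the face area."""
    return [row[left:left + width] for row in frame[top:top + height]]


# A tiny 3x2 "frame": a skin-toned pixel surrounded by green screen.
frame = [
    [(0, 255, 0), (0, 255, 0), (0, 255, 0)],
    [(0, 255, 0), (200, 180, 160), (0, 255, 0)],
]
face = crop(colour_key(frame), left=1, top=1, width=1, height=1)
print(face)  # [[(200, 180, 160, 255)]]
```

Production keyers typically also feather edges and suppress green spill, which this sketch does not attempt.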

I then placed this edited video onto the background Max had designed. I edited the rest of the installation in After Effects, adding Wi-Fi, code, and loading symbols to show signals being received from the person. Max also created the animations for the floor.

Presentation #2 - MidFi:

Here is some of the feedback we got:

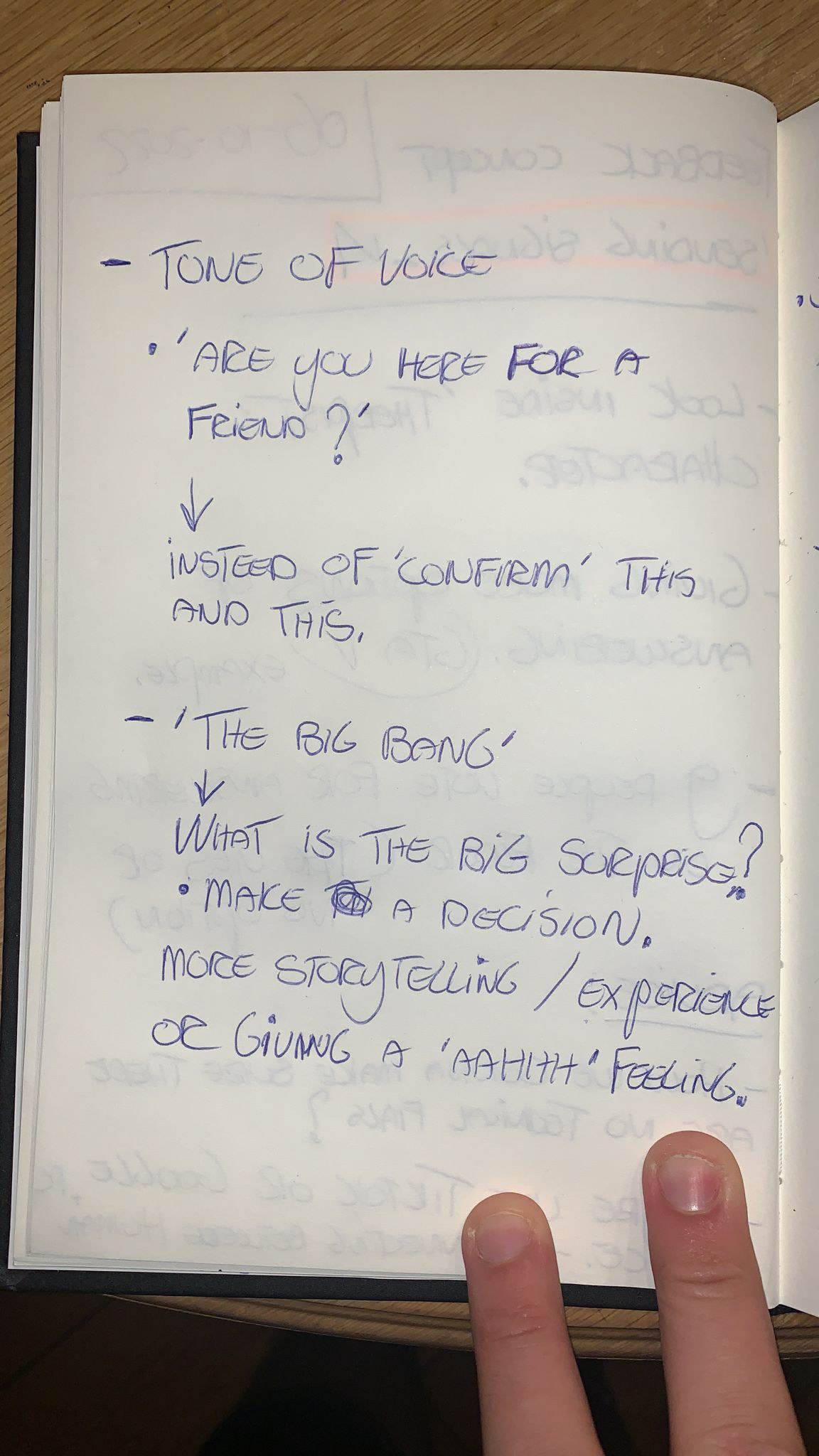

From this MidFi installation, we learnt that our narrative needed to improve. The story needed a bigger reveal to build immersion, and we needed to cut out the build-up.

Week 3

I helped Janniek a lot this week and came up with the new ending for the story. We changed the ending to create the "big bang"/surprise that people had suggested in the week 2 feedback. I also made a copy of the script in a format I would find easier to read when recording.

When recording the facial captures for ANN, I made some mistakes and redid the lines within the same take. We decided not to edit them out, as they fitted the concept we were creating and added a sense of breadcrumbing to the storyline. They were also subtle enough that people watching for the first time, before seeing the end, just assumed we had made an editing mistake.

We also decided to move the main screen so that it touched the floor and to add barriers on either side. The previous feedback had told us the open sides were very distracting and took people out of the immersion.

Sjoerd took over the visuals of ANN to make her more lifelike and fix the issues I was having with the renders. However, he also ran into rendering problems, so on the morning of our HiFi presentation, Max and I had to finish rendering ANN and editing the overall video.

We also had issues with TouchDesigner's mapping that we hadn't had the week before. We had to change the way the audience interacted with the installation, so they sat and pressed buttons on the floor instead of standing and moving. This actually worked out better, as it created a stronger feeling of immersion.

Presentation #3 - HiFi